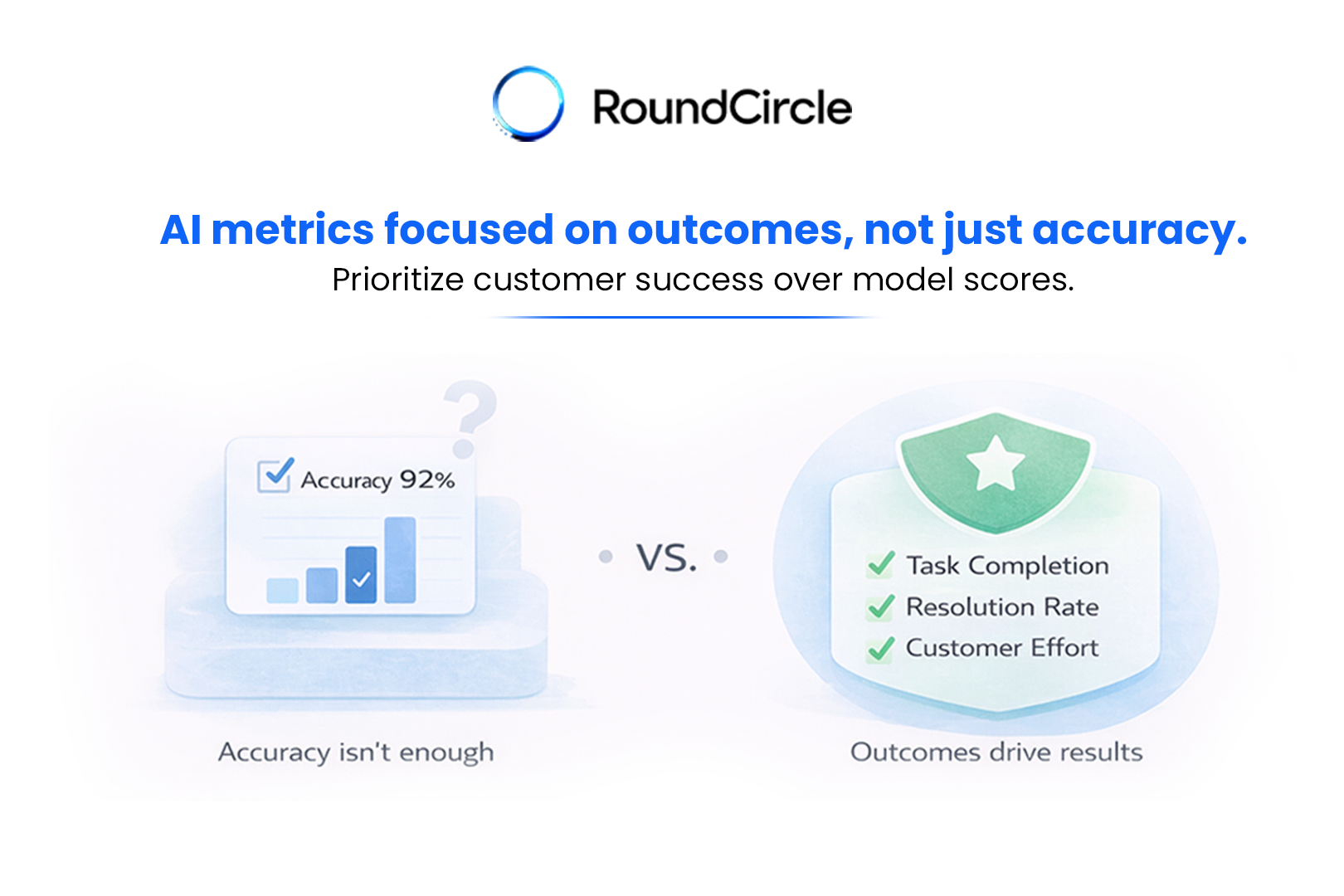

Most teams still measure AI performance with accuracy scores that do not reflect customer experience. Accuracy sounds technical and reliable, but it does not tell you whether a customer solved their problem, completed a task, or understood the next step. Leaders who depend on accuracy alone often believe their system is working even when customers feel confused and unsupported.

Accuracy is a model-focused metric. Customer experience is an outcome-focused discipline. When teams mix the two, they misjudge performance and slow down improvement. This article explains why accuracy is not a useful CX indicator and what metrics leaders should focus on instead.

Why Accuracy Became the Default Metric

Accuracy came from academic benchmarks, not from real customer journeys. It works for comparing language models but not for measuring support quality or commerce outcomes. Accuracy tracks whether a response matches a reference answer. It does not account for:

- intent

- steps

- decisions

- policy rules

- reasoning

- action completion

A model can achieve high accuracy while delivering responses that do not help customers complete their tasks. This gap explains why some teams celebrate benchmark scores while customers continue to struggle.
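To make the gap concrete, here is a minimal sketch that scores the same set of interactions two ways. The `Interaction` record, the `task_completed` flag, and the sample data are all hypothetical and used only for illustration. Real systems would derive completion from order, payment, or workflow events.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    response: str         # what the system said
    reference: str        # benchmark reference answer
    task_completed: bool  # hypothetical flag: did the customer finish the task?


def accuracy(interactions):
    """Share of responses that match the reference answer (case-insensitive)."""
    if not interactions:
        raise ValueError("no interactions to score")
    matches = sum(
        i.response.strip().lower() == i.reference.strip().lower()
        for i in interactions
    )
    return matches / len(interactions)


def task_completion_rate(interactions):
    """Share of interactions where the customer completed the task."""
    if not interactions:
        raise ValueError("no interactions to score")
    return sum(i.task_completed for i in interactions) / len(interactions)


# Illustrative data only: three responses match the reference,
# but only two customers actually finished what they came to do.
sample = [
    Interaction("Returns are accepted within 30 days.",
                "Returns are accepted within 30 days.", False),
    Interaction("Your order ships tomorrow.",
                "Your order ships tomorrow.", True),
    Interaction("You can change your plan in settings.",
                "You can change your plan in settings.", False),
    Interaction("I have updated your address.",
                "Your address has been updated.", True),
]

print(accuracy(sample))              # 0.75
print(task_completion_rate(sample))  # 0.5
```

In this toy data the last response fails the accuracy check even though it completed the task, while two "accurate" responses left the customer with nothing done.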

Myth: Higher Accuracy Means Better CX

Accuracy measures whether the text is correct. Customers judge whether the experience works. These are not the same.

A response can be technically correct and still fail the customer. For example:

- It gives the right information but does not explain the next step

- It answers the question but ignores related concerns

- It explains the policy but does not complete the task

- It looks polished but increases customer effort

Customers do not care about correctness in isolation. They care about progress. Accuracy cannot measure progress.

Reality: CX Success Comes From Task Completion

Task completion is the strongest indicator of whether a system supports the customer. It reflects whether the conversation moved the customer toward a meaningful outcome. Examples include:

- updating an order

- choosing the correct product

- confirming delivery details

- resolving a payment issue

- selecting a subscription plan

Task completion is not about the quality of a sentence. It is about the quality of the outcome. This is why models with high accuracy sometimes perform poorly in real environments. They communicate well but do not drive action.

Why Resolution Rate Is the Strongest CX Signal

Resolution rate tells you whether the system solved the customer’s problem. It reflects the true purpose of support and commerce conversations.

Resolution rate captures real outcomes across journeys such as:

- WISMO ("Where is my order?")

- returns

- account updates

- product discovery

- subscription changes

- delivery exceptions

Teams that measure resolution rate understand what actually happened during the interaction, not just what was written. It connects conversational systems to measurable business goals.
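One way to track this is to compute resolution rate per journey rather than as a single global number. The sketch below assumes each conversation has already been labeled with a journey and a resolved flag. The record shape and the sample data are assumptions, not a prescribed schema.

```python
from collections import defaultdict

# Hypothetical conversation records: (journey, resolved)
conversations = [
    ("WISMO", True),
    ("WISMO", True),
    ("WISMO", False),
    ("returns", True),
    ("returns", False),
    ("subscription changes", True),
]


def resolution_rate_by_journey(records):
    """Return {journey: resolved conversations / total conversations}."""
    totals = defaultdict(int)
    resolved = defaultdict(int)
    for journey, was_resolved in records:
        totals[journey] += 1
        if was_resolved:
            resolved[journey] += 1
    return {journey: resolved[journey] / totals[journey] for journey in totals}


rates = resolution_rate_by_journey(conversations)
for journey, rate in rates.items():
    print(f"{journey}: {rate:.0%}")
```

Splitting by journey shows where the system struggles. A healthy overall rate can hide a weak returns flow.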

Customer Effort Score: The Experience Metric Accuracy Misses

Even when a conversation ends with the correct answer, the effort required to reach that answer shapes customer sentiment. Customer effort score measures how easy the experience felt.

Effort includes:

- how many steps the customer took

- whether they repeated information

- how long the process felt

- how well the system understood context

- whether the system stayed consistent

Accuracy cannot detect effort. It cannot measure frustration, confusion, or unnecessary repetition. Customers remember how hard something felt, not whether a sentence was correct.
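Customer effort score is usually collected through a survey, but some of the signals listed above can be approximated from the transcript itself. The following is a rough sketch of that idea, not a replacement for asking customers. The `Turn` type and the exact-match rule for detecting repetition are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # "customer" or "agent"
    text: str


def effort_signals(turns):
    """Count customer turns and how often the customer repeated a message."""
    customer_msgs = [
        t.text.strip().lower() for t in turns if t.speaker == "customer"
    ]
    seen = set()
    repeats = 0
    for msg in customer_msgs:
        if msg in seen:
            repeats += 1  # customer had to say the same thing again
        seen.add(msg)
    return {"customer_turns": len(customer_msgs), "repeated_messages": repeats}


# Illustrative conversation: the agent asks for the order number twice.
conversation = [
    Turn("customer", "Where is my order?"),
    Turn("agent", "Can you share your order number?"),
    Turn("customer", "It is 1234."),
    Turn("agent", "Can you share your order number?"),
    Turn("customer", "It is 1234."),
    Turn("agent", "Your order arrives tomorrow."),
]

signals = effort_signals(conversation)
print(signals)  # {'customer_turns': 3, 'repeated_messages': 1}
```

The final answer here is correct, yet the customer had to repeat themselves to get it. An accuracy score would never surface that.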

Why EVALs Replace Accuracy in Conversational AI

Teams that depend on accuracy alone miss the complexity of real interactions. EVALs, structured evaluations of system behavior against defined criteria, fill this gap by evaluating:

- reasoning quality

- tone alignment

- safety

- policy compliance

- clarity

- step coverage

- multi-turn consistency

EVALs help leaders understand how well the system performs in realistic scenarios. They also help identify patterns that accuracy cannot capture. This makes EVALs far more aligned with customer experience than raw accuracy scores.
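As an illustration, an evaluation along these dimensions can be reduced to a weighted rubric. The sketch below is generic and does not represent any particular vendor's framework. The weights and the 0-to-1 rating scale are assumptions, and in practice the ratings would come from human reviewers or automated graders.

```python
# Hypothetical rubric: dimension names follow the list above,
# the weights are assumptions chosen for illustration.
WEIGHTS = {
    "reasoning quality": 2,
    "policy compliance": 2,
    "safety": 2,
    "clarity": 1,
    "tone alignment": 1,
    "step coverage": 1,
    "multi-turn consistency": 1,
}


def eval_score(ratings, weights=WEIGHTS):
    """Weighted average of per-dimension ratings, each between 0 and 1."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[d] * ratings[d] for d in weights) / total_weight


# A conversation that does well everywhere except step coverage.
ratings = {dimension: 1.0 for dimension in WEIGHTS}
ratings["step coverage"] = 0.0

score = eval_score(ratings)
print(round(score, 2))  # 0.9
```

Keeping the per-dimension ratings alongside the combined score matters, because the breakdown shows which behavior needs work.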

RoundCircle uses EVAL frameworks to measure the quality of outcomes rather than focusing on model performance alone. This approach helps teams improve reliability and maintain consistent behavior across languages, regions, and channels.

Why Observability Is Necessary for Real CX Metrics

Observability shows how an agent made decisions, what data it used, and where the behavior changed. It explains failures that accuracy cannot detect. Without observability, teams guess why a conversation failed.

Observability helps teams examine:

- the agent’s decision path

- when context was lost

- where the logic broke

- which rules were applied

- when workflows failed

- why the conversation stalled

Industry leaders such as IBM and Microsoft highlight observability as a requirement for reliable agent systems in large enterprises. Observability creates transparency. It helps teams understand how the system behaves inside real customer journeys, not just inside a benchmark environment.

Accuracy cannot explain behavior. Observability can.
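A minimal sketch of what a decision trace might look like is shown below. The `DecisionTrace` class, the step names, and the event fields are hypothetical. Production systems typically rely on a tracing or logging platform rather than an in-memory list.

```python
import json
import time


class DecisionTrace:
    """Collects the steps an agent took during one conversation."""

    def __init__(self, conversation_id):
        self.conversation_id = conversation_id
        self.events = []

    def record(self, step, **details):
        """Append one timestamped event describing a decision or action."""
        self.events.append({"ts": time.time(), "step": step, **details})

    def first_failure(self):
        """Return the first event marked as failed, or None."""
        for event in self.events:
            if event.get("status") == "failed":
                return event
        return None

    def to_json(self):
        return json.dumps(
            {"conversation_id": self.conversation_id, "events": self.events}
        )


# Illustrative trace for a return request that stalls at the workflow call.
trace = DecisionTrace("conv-001")
trace.record("intent_detected", intent="return_request", status="ok")
trace.record("rule_applied", rule="return_window_30_days", status="ok")
trace.record("workflow_call", workflow="create_return_label",
             status="failed", error="timeout")

failure = trace.first_failure()
print(failure["step"], failure["error"])  # workflow_call timeout
```

With a trace like this, the team can see that the intent and the rule were handled correctly and that the conversation stalled because a workflow timed out.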

How Accuracy Misleads Teams and Slows Progress

Accuracy creates a false sense of confidence. When leaders see high accuracy scores, they assume the system is ready. Customers often discover the opposite.

Common failure patterns include:

- correct answers that do not complete tasks

- correct text that violates business rules

- correct information that confuses the customer

- correct outputs that increase effort

- correct responses that do not lead to a next step

Accuracy can rise while customer satisfaction drops. This happens because accuracy measures correctness of text, not correctness of experience.

A Better Metric Stack for Conversational Commerce

A strong conversational system requires metrics that reflect outcomes, not model scores. A better metric stack includes:

- task completion

- resolution rate

- customer effort

- escalation rate

- handoff quality

- repeated intent performance

- policy alignment

- failure pattern trends

This stack produces insight that accuracy alone cannot deliver. It connects performance to business goals such as conversion, retention, and customer satisfaction.
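Several of these metrics can be summarized from the same set of conversation records. The sketch below covers a subset of the stack. The `ConversationOutcome` fields and the sample data are assumptions, and metrics such as handoff quality or policy alignment would need their own evaluation step.

```python
from dataclasses import dataclass


@dataclass
class ConversationOutcome:
    task_completed: bool
    resolved: bool
    escalated: bool
    customer_turns: int  # simple proxy for customer effort


def metric_stack(outcomes):
    """Summarize outcome metrics across a batch of conversations."""
    n = len(outcomes)
    if n == 0:
        raise ValueError("no conversations to summarize")
    return {
        "task_completion": sum(o.task_completed for o in outcomes) / n,
        "resolution_rate": sum(o.resolved for o in outcomes) / n,
        "escalation_rate": sum(o.escalated for o in outcomes) / n,
        "avg_customer_turns": sum(o.customer_turns for o in outcomes) / n,
    }


# Illustrative data only.
outcomes = [
    ConversationOutcome(True, True, False, 2),
    ConversationOutcome(True, False, True, 6),
    ConversationOutcome(False, False, True, 5),
    ConversationOutcome(True, True, False, 3),
]

report = metric_stack(outcomes)
for name, value in report.items():
    print(f"{name}: {value}")
```

Reviewing these numbers together, week over week, makes it easier to spot a rising escalation rate or growing effort before they show up in satisfaction surveys.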

How RoundCircle Helps Teams Redesign Their Metric Stack

RoundCircle helps teams shift from model-first metrics to customer-first metrics. This includes:

- custom EVAL systems

- observability layers for full traceability

- journey-specific measurements

- dashboards that track resolution and effort

- tools that highlight failures

- testing that reflects real customer paths

These capabilities help teams build conversational systems that behave consistently and produce outcomes leaders can trust.

Next Steps for CX and Commerce Leaders

Teams that depend on accuracy alone miss the metrics that influence customer progress, customer satisfaction, and revenue. Leaders who adopt a task-based approach gain clarity on what actually works and why. This shift creates conversational systems that improve every week rather than remaining static.

To review your metric stack and redesign it for customer success, book a demo with RoundCircle.